Migrate Hive managed tables to Cloud de Confiance

This document shows you how to migrate your Hive managed tables to Cloud de Confiance.

You can use the Hive managed tables migration connector in the BigQuery Data Transfer Service to seamlessly migrate your tables managed by Hive metastore, supporting both Hive and Iceberg formats from on-premises and cloud environments to Cloud de Confiance. The Hive managed tables migration connector supports files stored in the following data sources:

- HDFS

- Amazon S3

- Azure Blob Storage or Azure Data Lake Storage Gen2

With the Hive managed tables migration connector, you can register your Hive managed tables with Dataproc Metastore, BigLake metastore, or BigLake metastore Iceberg REST Catalog while using Cloud Storage as the file storage.

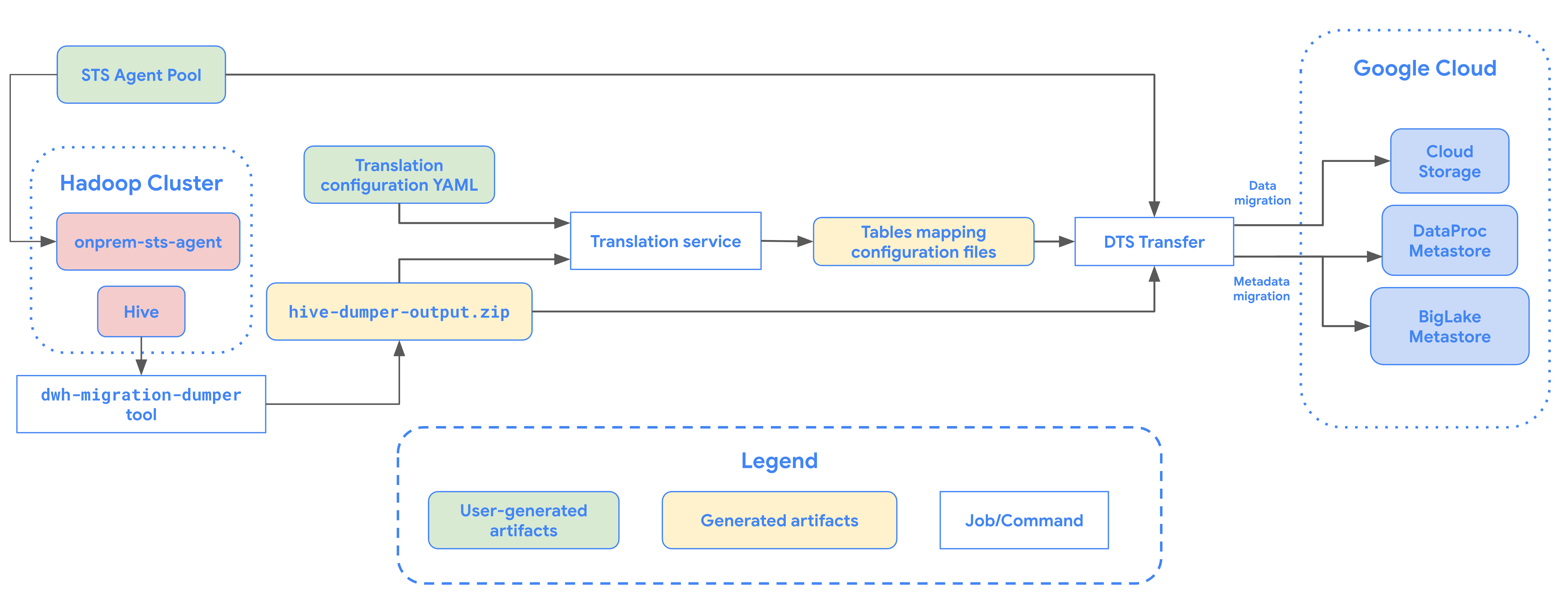

The following diagram provides an overview of the table migration process from Hadoop cluster.

Limitations

Hive managed tables transfers are subject to the following limitations:

- To migrate Apache Iceberg tables, you must register the tables with BigLake metastore to allow write access for open-source engines (such as Apache Spark or Flink), and to allow read access for BigQuery.

- To migrate Hive managed tables, you must register the tables with Dataproc Metastore to allow write access for open-source engines, and to allow read access for BigQuery.

- You must use the bq command-line tool to migrate Hive managed tables to BigQuery.

Before you begin

Before you schedule Hive managed tables transfer, you must perform the following:

Generate metadata file for Apache Hive

Run the dwh-migration-dumper tool to extract metadata

for Apache Hive. The tool generates a file named hive-dumper-output.zip

to a Cloud Storage bucket, referred to in this document as DUMPER_BUCKET.

Enable APIs

Enable the following APIs in your Cloud de Confiance project:

- Data Transfer API

- Storage Transfer API

A service agent is created when you enable the Data Transfer API.

Configure permissions

- Create a service account and grant it the BigQuery Admin role (

roles/bigquery.admin). This service account is used to create the transfer configuration. A service agent (P4SA) is created upon enabling the Data Transfer API. Grant it the following roles:

roles/metastore.metadataOwnerroles/storagetransfer.adminroles/serviceusage.serviceUsageConsumerroles/storage.objectAdmin- If you are migrating metadata for BigLake Iceberg tables, you must also grant the

roles/bigquery.adminrole. - If you are migrating metadata to BigLake metastore Iceberg REST Catalog, you must also grant the

roles/biglake.adminrole.

- If you are migrating metadata for BigLake Iceberg tables, you must also grant the

Grant the service agent the

roles/iam.serviceAccountTokenCreatorrole with the following command:gcloud iam service-accounts add-iam-policy-binding SERVICE_ACCOUNT --member serviceAccount:service-PROJECT_NUMBER@gcp-sa-bigquerydatatransfer.s3ns-system.iam.gserviceaccount.com --role roles/iam.serviceAccountTokenCreator

Configure your Storage Transfer Agent for HDFS data lakes

Required when the file is stored in HDFS. To set up the storage transfer agent required for an HDFS data lake transfer, do the following:

- Configure permissions to run the storage transfer agent on your Hadoop cluster.

- Install Docker on on-premises agent machines.

- Create a Storage Transfer Service agent pool in your Cloud de Confiance by S3NS project.

- Install agents on your on-premises agent machines.

Configure Storage Transfer Service permissions for Amazon S3

Required when the file is stored in Amazon S3. Transfers from Amazon S3 are agentless transfers, which require specific permissions. To configure the Storage Transfer Service for a Amazon S3 transfer, do the following:

- Configure agentless transfer permissions.

- Setup access credentials for AWS Amazon S3.

- Note the access key ID and secret access key after setting up your access credentials.

- Add IP ranges used by Storage Transfer Service workers to your list of allowed IPs if your AWS project uses IP restrictions.

Configure Storage Transfer Service permissions for Microsoft Azure Storage

Required when the file is stored in Azure Blob Storage or Azure Data Lake Storage Gen2. Transfers from Microsoft Azure Storage are agentless transfers, which require specific permissions. To configure the Storage Transfer Service for a Microsoft Azure Storage transfer, do the following:

- Configure agentless transfer permissions.

- Generate a Shared Access Signature (SAS) token for your Microsoft Azure storage account.

- Note the SAS token after generating it.

- Add IP ranges used by Storage Transfer Service workers to your list of allowed IPs if your Microsoft Azure storage account uses IP restrictions.

Schedule Hive managed tables transfer

Select one of the following options:

Console

Go to the Data transfers page in the Cloud de Confiance console.

Click Create transfer.

In the Source type section, select Hive Managed Tables from the Source list.

For Location, select a location type, and then select a region.

In the Transfer config name section, for Display name, enter a name for the data transfer.

In the Schedule options section, do the following:

- In the Repeat frequency list, select an option to specify how often this data transfer runs. To specify a custom repeat frequency, select Custom. If you select On-demand, then this transfer runs when you manually trigger the transfer.

- If applicable, select either Start now or Start at set time, and provide a start date and run time.

In the Data source details section, do the following:

- For Table name patterns, specify HDFS data lake tables to transfer by providing table names or patterns that matches tables in the HDFS database. You must use Java regular expression syntax to specify table patterns. For example:

db1..*matches all tables in db1.db1.table1;db2.table2matches table1 in db1 and table2 in db2.

- For BQMS discovery dump gcs path, enter the path to the bucket that contains the

hive-dumper-output.zipfile that you generated when creating a metadata file for Apache Hive. - Choose the Metastore type from the drop-down list:

DATAPROC_METASTORE: Select this option to store your metadata in Dataproc Metastore. You must provide the URL for the Dataproc Metastore in Dataproc metastore url.BIGLAKE_METASTORE: Select this option to store your metadata in BigLake metastore. You must provide a BigQuery dataset in BigQuery dataset.BIGLAKE_REST_CATALOG: Select this option to store your metadata in the BigLake metastore Iceberg REST catalog.

For Destination gcs path, enter a path to a Cloud Storage bucket to store your migrated data.

Optional: For Service account, enter a service account to use with this data transfer. The service account should belong to the same Cloud de Confiance by S3NS project where the transfer configuration and destination dataset is created.

Optional: You can enable Use translation output to set up a unique Cloud Storage path and database for each table being migrated. To do this, provide the path to the Cloud Storage folder containing the translation results in the BQMS translation output gcs path field. For more information, see Configure Translation output.

- If you specify a Translation output Cloud Storage path, the destination Cloud Storage path and BigQuery dataset will be sourced from the files in that path.

For Storage type, select one of the following options:

HDFS: Select this option if your file storage isHDFS. In the STS agent pool name field, you must provide the name of the agent pool that you created when you configured your Storage Transfer Agent.S3: Select this option if your file storage isAmazon S3. In the Access key ID and Secret access key fields, you must provide the access key ID and secret access key that you created when you set up your access credentials.AZURE: Select this option if your file storage isAzure Blob Storage. In the SAS token field, you must provide the SAS token that you created when you set up your access credentials.

- For Table name patterns, specify HDFS data lake tables to transfer by providing table names or patterns that matches tables in the HDFS database. You must use Java regular expression syntax to specify table patterns. For example:

bq

To schedule Hive managed tables transfer, enter the bq mk

command and supply the transfer creation flag --transfer_config:

bq mk --transfer_config --data_source=hadoop --display_name='TRANSFER_NAME' --service_account_name='SERVICE_ACCOUNT' --project_id='PROJECT_ID' --location='REGION' --params='{"table_name_patterns":"LIST_OF_TABLES", "table_metadata_path":"gs://DUMPER_BUCKET/hive-dumper-output.zip", "target_gcs_file_path":"gs://MIGRATION_BUCKET", "metastore":"METASTORE", "destination_dataproc_metastore":"DATAPROC_METASTORE_URL", "destination_bigquery_dataset":"BIGLAKE_METASTORE_DATASET", "translation_output_gcs_path":"gs://TRANSLATION_OUTPUT_BUCKET/metadata/config/default_database/", "storage_type":"STORAGE_TYPE", "agent_pool_name":"AGENT_POOL_NAME", "aws_access_key_id":"AWS_ACCESS_KEY_ID", "aws_secret_access_key":"AWS_SECRET_ACCESS_KEY", "azure_sas_token":"AZURE_SAS_TOKEN" }'

Replace the following:

TRANSFER_NAME: the display name for the transfer configuration. The transfer name can be any value that lets you identify the transfer if you need to modify it later.SERVICE_ACCOUNT: the service account name used to authenticate your transfer. The service account should be owned by the sameproject_idused to create the transfer and it should have all of the required permissions.PROJECT_ID: your Cloud de Confiance by S3NS project ID. If--project_idisn't supplied to specify a particular project, the default project is used.REGION: location of this transfer configuration.LIST_OF_TABLES: a list of entities to be transferred. Use a hierarchical naming spec -database.table. This field supports RE2 regular expression to specify tables. For example:db1..*: specifies all tables in the databasedb1.table1;db2.table2: a list of tables

DUMPER_BUCKET: the Cloud Storage bucket containing thehive-dumper-output.zipfile.MIGRATION_BUCKET: Destination GCS path to which all underlying files will be loaded.METASTORE: The type of metastore to migrate to. Set this to one of the following values:DATAPROC_METASTORE: To transfer metadata to Dataproc Metastore.BIGLAKE_METASTORE: To transfer metadata to BigLake metastore.BIGLAKE_REST_CATALOG: To transfer metadata to BigLake metastore Iceberg REST Catalog.

DATAPROC_METASTORE_URL: The URL of your Dataproc Metastore. Required ifmetastoreisDATAPROC_METASTORE.BIGLAKE_METASTORE_DATASET: The BigQuery dataset for your BigLake metastore. Required ifmetastoreisBIGLAKE_METASTORE.TRANSLATION_OUTPUT_BUCKET: (Optional) Specify a Cloud Storage bucket for the translation output. For more information, see Using Translation output.STORAGE_TYPE: Specify the underlying file storage for your tables. Supported types areHDFS,S3, andAZURE.AGENT_POOL_NAME: the name of the agent pool used for creating agents. Required ifstorage_typeisHDFS.AWS_ACCESS_KEY_ID: the access key ID from access credentials. Required ifstorage_typeisS3.AWS_SECRET_ACCESS_KEY: the secret access key from access credentials. Required ifstorage_typeisS3.AZURE_SAS_TOKEN: the SAS token from access credentials. Required ifstorage_typeisAZURE.

Run this command to create the transfer configuration and start the Hive managed tables transfer. Transfers are scheduled to run every 24 hours by default, but can be configured with transfer scheduling options.

When the transfer is complete, your tables in Hadoop cluster will be

migrated to MIGRATION_BUCKET.

Data ingestion options

The following sections provide more information about how you can configure your Hive managed tables transfers.

Incremental transfers

When a transfer configuration is set up with a recurring schedule, every subsequent transfer updates the table on Cloud de Confiance by S3NS with the latest updates made to the source table. For example, all insert, delete, or update operations with schema changes are reflected in Cloud de Confiance by S3NS with each transfer.

Transfer scheduling options

By default, transfers are scheduled to

run every 24 hours by default. To configure how often transfers are run,

add the --schedule flag to the transfer configuration, and specify a transfer

schedule using the schedule syntax.

Hive managed tables transfers must have a minimum of 24 hours

between transfer runs.

For one-time transfers, you can add the

end_time flag to the transfer configuration to only run the

transfer once.

Configure Translation output

You can configure a unique Cloud Storage path and database for each migrated table. To do so, perform the following steps to generate a tables mapping YAML file that you can use in your transfer configuration.

Create a configuration YAML file (suffixed with

config.yaml) in theDUMPER_BUCKETthat contains the following:type: object_rewriter relation: - match: relationRegex: ".*" external: location_expression: "'gs://MIGRATION_BUCKET/' + table.schema + '/' + table.name"

- Replace

MIGRATION_BUCKETwith the name of the Cloud Storage bucket that is the destination for your migrated table files. Thelocation_expressionfield is a common expression language (CEL) expression.

- Replace

Create another configuration YAML file (suffixed with

config.yaml) in theDUMPER_BUCKETthat contains the following:type: experimental_object_rewriter relation: - match: schema: SOURCE_DATABASE outputName: database: null schema: TARGET_DATABASE

- Replace

SOURCE_DATABASEandTARGET_DATABASEwith the name of source database name and Dataproc Metastore database or BigQuery dataset depending on the chosen metastore. Ensure that the BigQuery dataset exists if you are configuring the database for BigLake metastore.

For more information about these configuration YAML, see Guidelines to create a configuration YAML file.

- Replace

Generate tables mapping YAML file using the following command:

curl -d '{ "tasks": { "string": { "type": "HiveQL2BigQuery_Translation", "translation_details": { "target_base_uri": "TRANSLATION_OUTPUT_BUCKET", "source_target_mapping": { "source_spec": { "base_uri": "DUMPER_BUCKET" } }, "target_types": ["metadata"] } } } }' \ -H "Content-Type:application/json" \ -H "Authorization: Bearer TOKEN" -X POST https://bigquerymigration.googleapis.com/v2alpha/projects/PROJECT_ID/locations/LOCATION/workflows

Replace the following:

TRANSLATION_OUTPUT_BUCKET: (Optional) Specify a Cloud Storage bucket for the translation output. For more information, see Using Translation output.DUMPER_BUCKET: the base URI for Cloud Storage bucket that contains thehive-dumper-output.zipand configuration YAML file.TOKEN: the OAuth token. You can generate this in the command line with the commandgcloud auth print-access-token.PROJECT_ID: the project to process the translation.LOCATION: the location where the job is processed. For example,euorus.

Monitor the status of this job. When completed, a mapping file is generated for each table in database within a predefined path in

TRANSLATION_OUTPUT_BUCKET.

Orchestrate dumper execution by using the cron command

You can automate incremental transfers by using a cron job to execute the dwh-migration-dumper tool. By automating metadata extraction, you ensure that an up-to-date dump from Hadoop is available for subsequent incremental transfer runs.

Before you begin

Before using this automation script, complete the dumper installation prerequisites. To run the script, you must have the dwh-migration-dumper tool installed and the necessary IAM permissions configured.

Scheduling the automation

Save the following script to a local file. This script is designed to be configured and executed by a

crondaemon to automate the extraction and upload process of dumper output:#!/bin/bash # Exit immediately if a command exits with a non-zero status. set -e # Treat unset variables as an error when substituting. set -u # Pipelines return the exit status of the last command to exit with a non-zero status. set -o pipefail # These values are used if not overridden by command-line options. DUMPER_EXECUTABLE="DUMPER_PATH/dwh-migration-dumper" GCS_BASE_PATH="gs://PATH_TO_DUMPER_OUTPUT" LOCAL_BASE_DIR="LOCAL_BASE_DIRECTORY_PATH" # Function to display usage information usage() { echo "Usage: $0 [options]" echo "" echo "Runs the dwh-migration-dumper tool and uploads its output to provided GCS path." echo "" echo "Options:" echo " --dumper-executable

The full path to the dumper executable. (Required)" echo " --gcs-base-pathThe base GCS path for output files. (Required)" echo " --local-base-dirThe local base directory for logs and temp files. (Required)" echo " -h, --help Display this help message and exit." exit 1 } # This loop processes command-line options and overrides the default configuration. while [[ "$#" -gt 0 ]]; do case $1 in --dumper-executable) DUMPER_EXECUTABLE="$2" shift # past argument shift # past value ;; --gcs-base-path) GCS_BASE_PATH="$2" shift shift ;; --local-base-dir) LOCAL_BASE_DIR="$2" shift shift ;; -h|--help) usage ;; *) echo "Unknown option: $1" usage ;; esac done # This runs AFTER parsing arguments to ensure no placeholder values are left. if [[ "$DUMPER_EXECUTABLE" == "DUMPER_PATH"* || "$GCS_BASE_PATH" == "gs://PATH_TO_DUMPER_OUTPUT" || "$LOCAL_BASE_DIR" == "LOCAL_BASE_DIRECTORY_PATH" ]]; then echo "ERROR: One or more configuration variables have not been set. Please provide them as command-line arguments or edit the script." >&2 echo "Run with --help for more information." >&2 exit 1 fi # Create unique timestamp and directories for this run EPOCH=$(date +%s) LOCAL_LOG_DIR="${LOCAL_BASE_DIR}/logs" mkdir -p "${LOCAL_LOG_DIR}" # Ensures the base and logs directories exist # Define the unique log and zip file path for this run LOG_FILE="${LOCAL_LOG_DIR}/dumper_execution_${EPOCH}.log" ZIP_FILE_NAME="hive-dumper-output_${EPOCH}.zip" LOCAL_ZIP_PATH="${LOCAL_BASE_DIR}/${ZIP_FILE_NAME}" echo "Script execution started. All subsequent output will be logged to: ${LOG_FILE}" # --- Helper Functions --- log() { echo "$(date '+%Y-%m-%d %H:%M:%S') - $@" >> "${LOG_FILE}"} cleanup() { local path_to_remove="$1" log "Cleaning up local file/directory: ${path_to_remove}..." rm -rf "${path_to_remove}" } # This function is called when the script exits to ensure cleanup and logging happen reliably. handle_exit() { local exit_code=$? # Only run the failure logic if the script is exiting with an error if [[ ${exit_code} -ne 0 ]]; then log "ERROR: Script is exiting with a failure code (${exit_code})." local gcs_log_path_on_failure="${GCS_BASE_PATH}/logs/$(basename "${LOG_FILE}")" log "Uploading log file to ${gcs_log_path_on_failure} for debugging..." # Attempt to upload the log file on failure, but don't let this command cause the script to exit. gsutil cp "${LOG_FILE}" "${gcs_log_path_on_failure}" > /dev/null 2>&1 || log "WARNING: Failed to upload log file to GCS." else # SUCCESS PATH log "Script finished successfully. Now cleaning up local zip file...." # Clean up the local zip file ONLY on success cleanup "${LOCAL_ZIP_PATH}" fi log "*****Script End*****" exit ${exit_code} } # Trap the EXIT signal to run the handle_exit function, ensuring cleanup always happens. trap handle_exit EXIT # Validates the dumper log file based on a strict set of rules. validate_dumper_output() { local log_file_to_check="$1" # Check for the specific success message from the dumper tool. if grep -q "Dumper execution: SUCCEEDED" "${log_file_to_check}"; then log "Validation Successful: Found 'Dumper execution: SUCCEEDED' message." return 0 # Success else log "ERROR: Validation failed. The 'Dumper execution: SUCCEEDED' message was not found." return 1 # Failure fi } # --- Main Script Logic --- log "*****Script Start*****" log "Dumper Executable: ${DUMPER_EXECUTABLE}" log "GCS Base Path: ${GCS_BASE_PATH}" log "Local Base Directory: ${LOCAL_BASE_DIR}" # Use an array to build the command safely dumper_command_args=( "--connector" "hiveql" "--output" "${LOCAL_ZIP_PATH}" ) log "Starting dumper tool execution..." log "COMMAND: ${DUMPER_EXECUTABLE} ${dumper_command_args[*]}" "${DUMPER_EXECUTABLE}" "${dumper_command_args[@]}" >> "${LOG_FILE}" 2>&1 log "Dumper process finished." # Validate the output from the dumper execution for success or failure. validate_dumper_output "${LOG_FILE}" # Upload the ZIP file to GCS gcs_zip_path="${GCS_BASE_PATH}/${ZIP_FILE_NAME}" log "Uploading ${LOCAL_ZIP_PATH} to ${gcs_zip_path}..." if [ ! -f "${LOCAL_ZIP_PATH}" ]; then log "ERROR: Expected ZIP file ${LOCAL_ZIP_PATH} not found after dumper execution." # The script will exit here with an error code, and the trap will run. exit 1 fi gsutil cp "${LOCAL_ZIP_PATH}" "${gcs_zip_path}" >> "${LOG_FILE}" 2>&1 log "Upload to GCS successful." # The script will now exit with code 0. The trap will call cleanup and log the script end.Run the following command to make the script executable:

chmod +x PATH_TO_SCRIPT

Schedule the script using

crontab, replacing the variables with appropriate values for your job. Add an entry to schedule the job. The following example runs the script every day at 2:30 AM:# Run the Hive dumper daily at 2:30 AM for incremental BigQuery transfer. 30 2 * * * PATH_TO_SCRIPT \ --dumper-executable PATH_TO_DUMPER_EXECUTABLE \ --gcs-base-path GCS_PATH_TO_UPLOAD_DUMPER_OUTPUT \ --local-base-dir LOCAL_PATH_TO_SAVE_INTERMEDIARY_FILES

When creating the transfer, ensure the

table_metadata_pathfield is set to the same Cloud Storage path you configured forGCS_PATH_TO_UPLOAD_DUMPER_OUTPUT. This is the path containing the dumper output ZIP files.

Scheduling Considerations

To avoid data staleness, the metadata dump must be ready before your scheduled transfer begins. Configure the cron job frequency accordingly.

We recommend performing a few trial runs of the script manually to determine the average time it takes for the dumper tool to generate its output. Use this timing to set a cron schedule that safely precedes your DTS transfer run and ensures freshness.

Monitor Hive managed tables transfers

After you schedule Hive managed tables transfer, you can monitor the transfer job with bq command-line tool commands. For information about monitoring your transfer jobs, see View your transfers.

Track table migration status

You can also run the

dwh-dts-status tool to monitor the status of all transferred tables within

a transfer configuration or a particular database. You can also use the dwh-dts-status

tool to list all transfer configurations in a project.

Before you begin

Before you can use the dwh-dts-status tool, do the following:

Get the

dwh-dts-statustool by downloading thedwh-migration-toolpackage from thedwh-migration-toolsGitHub repository.Authenticate your account to Cloud de Confiance by S3NS with the following command:

gcloud auth application-default loginFor more information, see How Application Default Credentials work.

Verify that the user has the

bigquery.adminandlogging.viewerrole. For more information about IAM roles, see Access control reference.

List all transfer configurations in a project

To list all transfer configurations in a project, use the following command:

./dwh-dts-status --list-transfer-configs --project-id=[PROJECT_ID] --location=[LOCATION]

Replace the following:

PROJECT_ID: the Cloud de Confiance by S3NS project ID that is running the transfers.LOCATION: the location where the transfer configuration was created.

This command outputs a table with a list of transfer configuration names and IDs.

View statuses of all tables in a configuration

To view the status of all tables included in a transfer configuration, use the following command:

./dwh-dts-status --list-status-for-config --project-id=[PROJECT_ID] --config-id=[CONFIG_ID] --location=[LOCATION]

Replace the following:

PROJECT_ID: the Cloud de Confiance by S3NS project ID that is running the transfers.LOCATION: the location where the transfer configuration was created.CONFIG_ID: the ID of the specified transfer configuration.

This command outputs a table with a list of tables, and their transfer status,

in the specified transfer configuration. The transfer status can be one of the

following values: PENDING, RUNNING, SUCCEEDED, FAILED, CANCELLED.

View statuses of all tables in a database

To view the status of all tables transferred from a specific database, use the following command:

./dwh-dts-status --list-status-for-database --project-id=[PROJECT_ID] --database=[DATABASE]

Replace the following:

PROJECT_ID: the Cloud de Confiance by S3NS project ID that is running the transfers.DATABASE:the name of the specified database.

This command outputs a table with a list of tables, and their transfer status,

in the specified database. The transfer status can be one of the

following values: PENDING, RUNNING, SUCCEEDED, FAILED, CANCELLED.